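I recently rolled out RabbitMQ at work to replace RocketMQ, which comes from Alibaba (don't accuse me of bashing Alibaba's stuff; that thing really is full of pitfalls). I'm not going to walk you through installing RabbitMQ; the official site covers that.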

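People online keep saying how fast RabbitMQ is, but however I tested it I only got 15,000 QPS, and that was with a golang client that does no processing at all in between. Sadly, the native Java client only reached 11,000 QPS (fine, I admit I'm bashing Java too). This doesn't look like a big problem, but I believe there is still plenty of room for optimization, so I ran some tests. First, the code: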

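My test environment: two VMs running RabbitMQ as a cluster, configured with CPU: 2 cores, mem: 2 GB, disk: 187 GB SATA.

The client is my macpro, connected to the test network through a Thunderbolt-to-gigabit Ethernet adapter. However, I'm told one of the switches along the way is only 100 Mbit/s (sob), so I have to do the math as if it were a 100 Mbit/s link.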

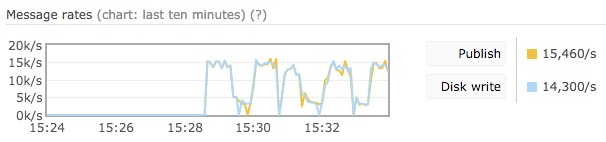

Test 1:

What is tested:

One producer, no consumers

Configuration:

Results:

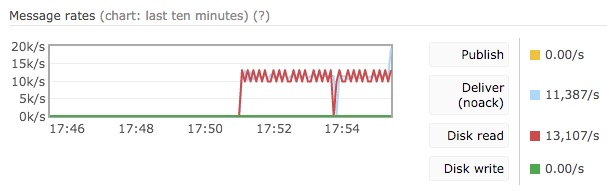

See the chart for yourself; it hardly needs any explanation from me.

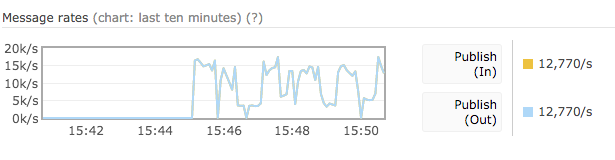

Test 2:

What is tested:

Three producers, no consumers

Configuration:

Results:

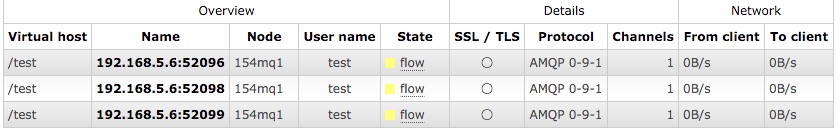

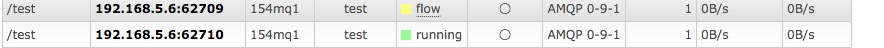

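I also noticed that the MQ had turned on flow control, so it apparently considers my publishing too fast.

So the number of producers has little effect on overall publish throughput, at least in the single-machine case. The publish rate also keeps fluctuating instead of staying within a stable range, which is probably caused by the flow control mechanism. I have no real idea yet how to tune flow control, so pointers from the experts are welcome.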

Test 3:

What is tested:

No producers, one consumer, Qos 0, default Ack; the consumer returns as soon as it receives a message, with no sleep

Configuration:

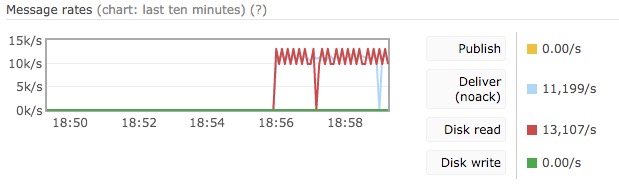

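Results:

Test 4:

What is tested:

No producers, one consumer, Qos 1, default Ack; the consumer returns as soon as it receives a message, with no sleep

Configuration:

Results: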

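There seems to be no difference here: consumption runs at about 10k/s in both cases, so setting Qos to 1 versus leaving it unset does not affect consumption capacity. Let's raise Qos and see what happens.

Test 5:

What is tested:

No producers, one consumer, Qos 10, default Ack; the consumer returns as soon as it receives a message, with no simulated business processing time

Configuration:

Results: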

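Raising Qos did not change consumption throughput here either, which makes sense: Qos essentially controls the size of the buffer of messages the consumer has waiting to be processed.

==However, on a poor network, Qos=1 and Qos=10 make a big difference to consumption==.

For example, suppose a message takes 50 ms to travel from the MQ to the consumer and only 5 ms to process. With Qos=1, the next message is not dispatched until the current one has been consumed, so each message costs 50*2+5=105 ms overall (50 ms for delivery, 5 ms for processing, 50 ms for the ack to travel back). With Qos=10, the next message can be dispatched as soon as one arrives, without waiting for it to be processed, so the consumer almost always has a message to work on and does not sit idle waiting on the network. Once 10 messages are buffered, their 10 × 5 ms = 50 ms of processing time just offsets the 50 ms of transfer.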

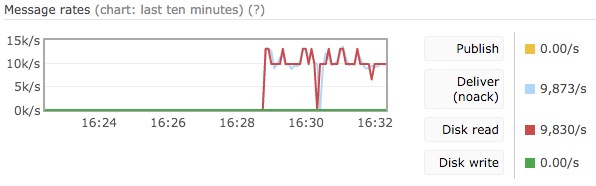

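The very last test for today: how much does publishing affect the consumer?

Test 6:

What is tested:

One producer, one consumer, Qos 10, default Ack; the consumer returns as soon as it receives a message, with no simulated business processing time

Configuration: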

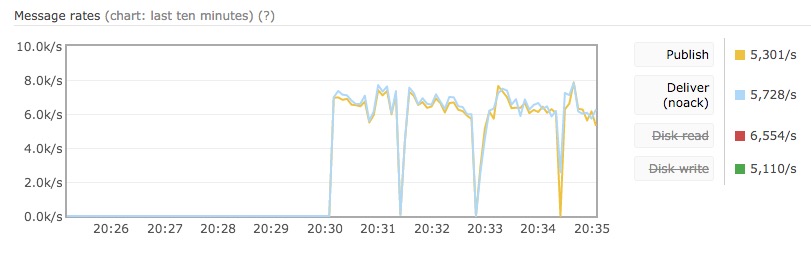

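Results:

Flow control was of course triggered, as shown in the figure below:

You can google the flow control mechanism yourself; I won't describe it here.

So running a consumer and a publisher at the same time does have an impact. How big that impact is and which factors are involved cannot be settled by this one test. It's getting late today, so I'll continue tomorrow.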